Before we get into this post, the first of several notes from your editor, Joey.

This is a new one for us. Not only did we ask another team member to get in here and write something, but that team member also built a really in-depth look at how their job works.

Rob leads our QA efforts, and, as we’re pushing through 0.1 launch bugs and feedback requests, we thought it’d be a great time to show you a bit of how Rob’s job works.

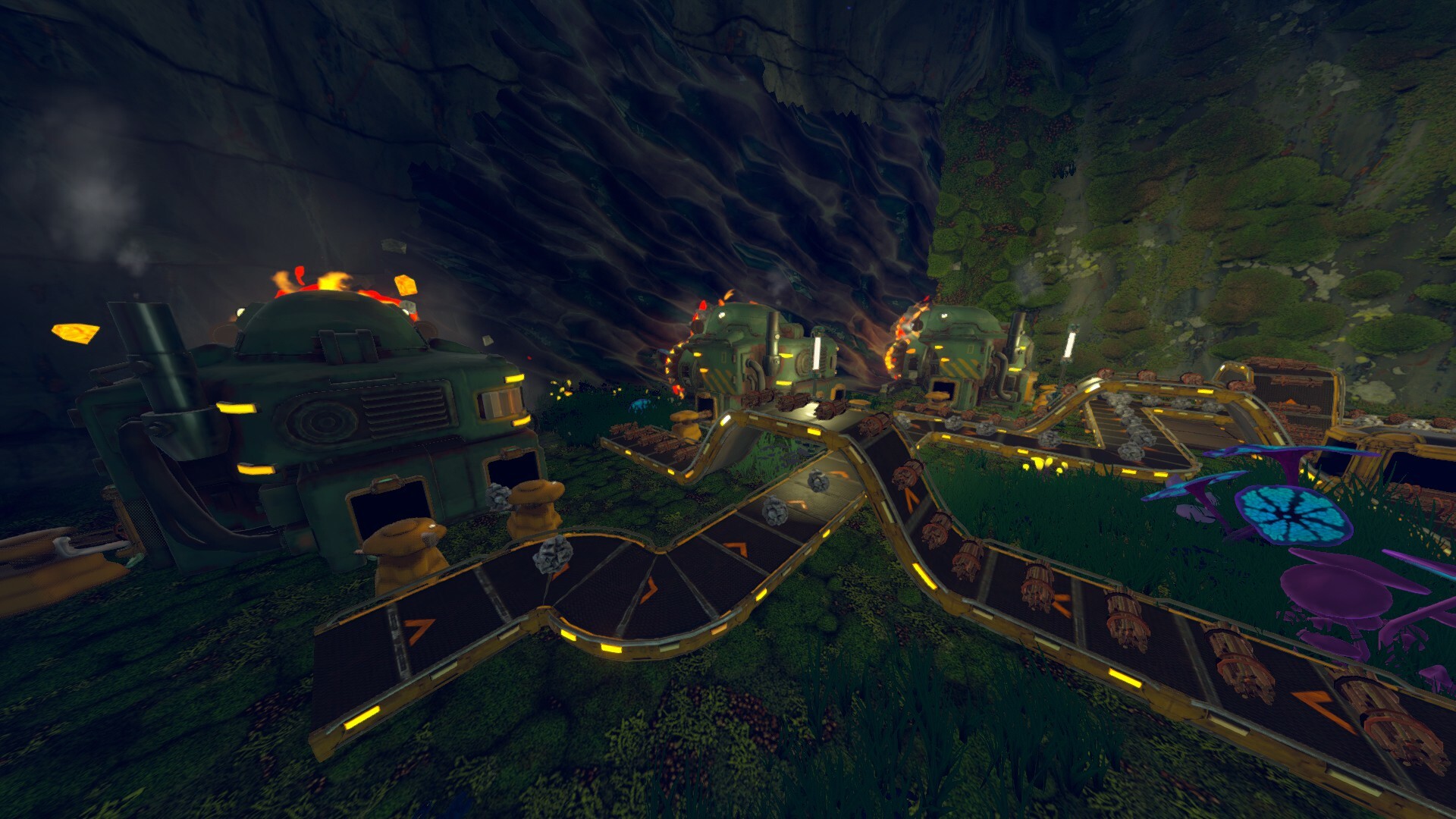

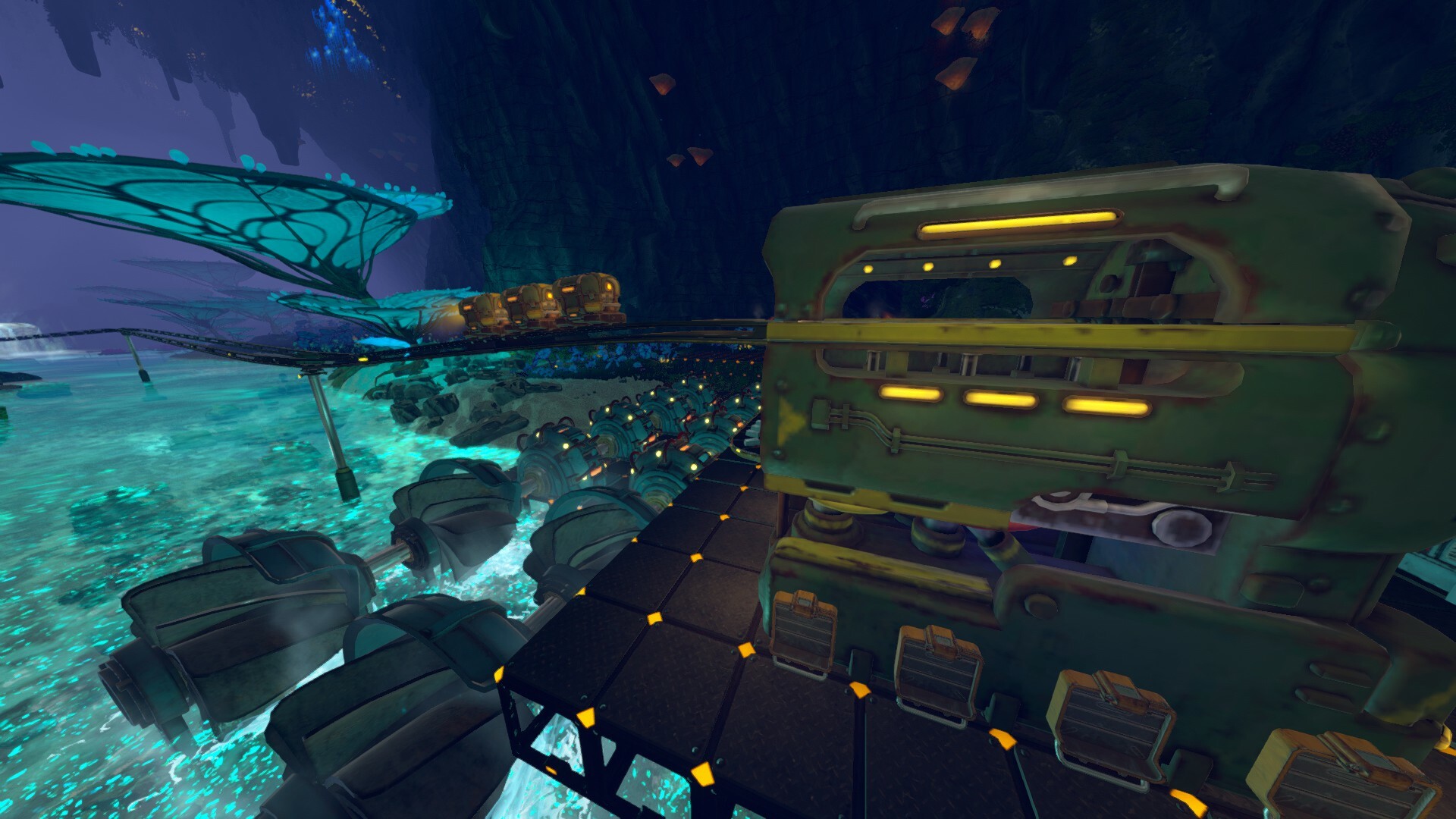

We now share this post about the QA process with you because of the Monorail bugs. Oh, the Monorail bugs. Monorail Depots and HVC cables don’t work well together right now, and this is a bug we know about and are working on. It won’t be ready for 0.1.1.

It’s not a simple fix, and the process outlined below should illustrate why that is.

What’s this week’s video for? It’s a summary of Rob’s post below. The post is great; read it if you have time. But if you’re looking for the quick hits, peep the video.

If you like this sort of post, let us know. We’ll do our best to repeat them as we roll through development.

=====

Hey, Groundbreakers!

My name is Rob, and I’m the QA Director on Techtonica.

To give you a little background on myself, I’ve been working in game development long enough that the first title I contributed to was released on the PS2. (So, yeah, I’m ancient.) I’ve been with Fire Hose Games for over six years, and I’ve been working on Techtonica since back when it was still called “Cave Factory.”

[Editor’s Note: Techtonica was never called “Cave Factory.” Only Rob has ever called Techtonica “Cave Factory.” Stop trying to make “Cave Factory” happen, Rob. – Joey]

Before we begin, two key points

I want to talk a little bit with you all about bugs: what they are, how we go about fixing them, and some of the decisions we have to make as part of that process. But my purpose in establishing my bona fides up front is not to present myself to you as some type of infallible authority on QA testing or game development processes. Rather, it’s this: you can’t work in QA for as long as I have without learning two things:

- No matter what kind of wild stuff you have seen in the past, you can never anticipate all the ways in which video games will surprise you by finding new and exciting ways to break.

- Professional humility is essential, and making categorical statements about how software development does or doesn’t work is just a really great way to hear yourself be wrong. As Ambrose Bierce once said, to be positive about something is to be mistaken at the top of one’s voice.

With the above being said, whenever you see me make a categorical-sounding statement in this blog post (such as, “that’s the sort of bug that is easy to fix!”), please mentally append the disclaimer “most of the time, probably” to the end.

Bugs happen, regardless of how much we hate them

Whenever we release a video game, our goal is to make that game as fun as possible, and also as bug-free as possible. That’s the case with every development team I’ve ever worked with, and it’s certainly the case here at Fire Hose Games. Unfortunately, while a bug-free video game (or bug-free software of any kind, for that matter) represents the Platonic ideal for which we all strive, the unavoidable reality is more mundane, and far grubbier: bugs happen.

Despite all our fervent best wishes, and the very best efforts of a team of reasonably intelligent and unquestionably attractive developers, bugs still happen.

Bugs, like Thanos, are inevitable. (Not all bugs are purple, though. Or voiced by Josh Brolin. But some of them – presumably – are.)

To give you some sense for why bugs are inevitably going to sneak through the testing net, imagine that I spent the entire year before Techtonica’s release testing the video game for 8 hours a day, 7 days a week. (This, of course, is not entirely accurate – I took some time off on the weekends to watch “Stranger Things” and eat cheese.) But even assuming I were that lacking in a life outside work, that would mean that, at most, I could have spent about 3,000 hours testing Techtonica in the year before release. And, even then, video games change constantly during development, so a good fraction of those 3,000 hours would have been spent testing content that will never see the light of day.

Now, for the sake of choosing a round number, imagine that Techtonica gets released, and 10,000 people download it as soon as it goes live. If they all play for one hour each, then they have already logged more than three times as many hours on Techtonica as I have in the entire year prior. Math is not the QA tester’s friend: no matter how much you test the game before release, your players are going to test it even more after release. And they’re going to find things that you didn’t or couldn’t.
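To make that arithmetic concrete, here is a small Python sketch using only the numbers from the two paragraphs above. The variable names are made up for illustration, and the 10,000 players and one hour each are the same round-number assumptions as in the text.

```python
# Back-of-the-envelope numbers from the text; nothing here is real telemetry.
HOURS_PER_DAY = 8
DAYS_PER_YEAR = 365  # 7 days a week, no time off

# One tester, testing every single day for a year.
tester_hours = HOURS_PER_DAY * DAYS_PER_YEAR

# Hypothetical launch: 10,000 players who each play for one hour.
players = 10_000
hours_per_player = 1
player_hours = players * hours_per_player

ratio = player_hours / tester_hours

print(f"Tester hours in a year: {tester_hours}")
print(f"Player hours after one hour each: {player_hours}")
print(f"Players out-test the tester by a factor of about {ratio:.1f}")
```

The tester's total comes out just under the "about 3,000 hours" quoted above, so the first hour after launch already covers more than three times that much playtime.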

So, once a bug makes it out into the wild, how do we deal with it?

To begin with, we should define what I mean when I’m talking about bugs. For our purposes, a bug is any time that the game does something other than what the game developers would expect it to do in that situation. This has a couple of ramifications.

First of all, it means that every game will have plenty of bugs that are harmless or that go essentially unnoticed by players. Because the game seems to be working fine and you don’t know that we were expecting it to work differently, you probably don’t think of those things as bugs, but technically they are.

On the opposite side of the same coin, there are plenty of cases where the game may be doing exactly what we programmed it to do, but to the player that behavior feels like a bug, because what we programmed the game to do sucks.

For example, imagine that you fire up Techtonica, and you find that your Mining Drills are burning way more fuel, but you’re getting the exact same amount of ore as before. I think that pretty much anyone experiencing this game behavior would say: “That’s a bug.” (And then probably some unprintable words as well.) But, in this hypothetical, it’s possible that Tom Hanks recently came to work at Fire Hose as our new gameplay designer. And it’s possible that Tom Hanks isn’t the warm, friendly, life-affirming sort of guy that his movies would lead you to believe. Instead, it’s possible that Tom Hanks is just a mean dude, who thinks that life is suffering, and that factory / automation game players should be made to experience this most of all.

So, the first thing Tom did once he got access to the Techtonica codebase was to change the game balance so that Mining Drills consume 10 times as much fuel as before, because that’s the way he believes the game should be played. [Editor’s Note: Tom Hanks does not work at Fire Hose, and he seems like a really nice guy. He did not balance the Mining Drills, or have any other involvement with Techtonica. We cannot stress this enough: we love Tom Hanks. Do not blame him for any bad gameplay experiences. (Also, Tom, if you’re reading this, please answer my letters?) – Joey]

In this (admittedly far-fetched) case, what you’re experiencing as a player is not technically a bug. It’s the game working as expected. It just turns out that the game working as expected isn’t much fun. And that happens more frequently than you might think.

This is why both bug reports and general feedback from players are so valuable to us. Please, please, keep sending them in. But for today’s purposes, I want to focus on the bug side of things, and not general feedback. (If that distinction seems pedantic to you, then you’re going to have to forgive me. I work in QA, so I make my living from pedantry.)

What I’m saying is this: we try to reproduce your bugs

Whenever a bug gets reported to us, the first thing we have to do is to reproduce it. That is to say, we have to try to get whatever happened to you to happen to us. Unless and until we can reproduce a bug for ourselves, it is very difficult to understand what is causing that bug, and almost impossible for us to fix.

Some bugs are pretty easy to reproduce. Imagine there’s a light switch in your home, only, whenever you flip it, your light doesn’t turn on. This happens every single time you flip the switch. This is an easy bug to reproduce, and consequently it’s an easy one to start diagnosing, and then trying to fix.

But, now, imagine a different problem with your light switch. Most of the time, when you flip it on, the light turns on. Most of the time, when you flip it off, the light turns off. But sometimes – just sometimes – it doesn’t. Maybe it doesn’t work once in 100 flips. This is a much harder problem to reproduce, and, consequently, it’s going to be harder to debug and solve as well. (Not to mention that, even if you think you have probably fixed the light switch bug, it’s hard to know for sure. If it works 100 straight times, is it fixed, or is that just expected variance?)
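That "is it fixed, or is it just variance?" question can be put into rough numbers. The sketch below assumes each flip is independent and that the unfixed switch fails exactly once in 100 flips on average. Both are simplifying assumptions, and the function names are hypothetical.

```python
import math

def chance_of_clean_streak(failure_rate: float, flips: int) -> float:
    """Probability that a still-broken switch works on every one of `flips` tries."""
    return (1.0 - failure_rate) ** flips

def flips_needed(failure_rate: float, max_false_confidence: float) -> int:
    """Smallest number of clean flips after which a still-broken switch would
    have survived by luck with probability below `max_false_confidence`."""
    return math.ceil(math.log(max_false_confidence) / math.log(1.0 - failure_rate))

# A switch that fails 1 time in 100, tested 100 times in a row.
p = chance_of_clean_streak(0.01, 100)
print(f"Chance of 100 clean flips while still broken: {p:.3f}")

# How many clean flips before luck alone explains it less than 5% of the time?
n = flips_needed(0.01, 0.05)
print(f"Clean flips needed for that: {n}")
```

Under those assumptions, a switch that is still broken sails through 100 straight flips a bit more than a third of the time, which is why a clean run of 100 says less than it seems to.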

A few of the bugs that players find in games do fit into that first, happy category. They have clear, repeatable repro steps, and, when they get brought to our attention, we are able to get to work on them fairly quickly. But a lot of bugs fall into the latter, trickier category. They don’t happen to all players, and – even for those players they do happen to – they don’t happen all the time. When those bugs turn up, our first job as QA testers is to get as much information from players as possible, and then to use that information – combined with whatever context or experience we may have – to try to reproduce the bug on our test machines.

This is why, when you report a bug on our Discord, you’ll often see me sliding into your mentions, asking you questions about what you were doing when it happened, and if you can share your save files or player logs with us. That sort of debugging information is vital, and can often be the difference between an apparently “random” bug that could take us days, weeks, or even months of methodical experimentation to pin down and reproduce, and one that we can get a handle on pretty quickly.

(I put the word “random” in scare quotes, because video games are full of variables, and “random” is a word that QA professionals hate. While some truly random bugs do exist, they are vanishingly rare, and most of what appears random to players and testers is actually the result of a specific combination of known or unknown variables, interacting in a way that is not at all obvious just from the visible outcome.)

A lot of QA work boils down to laboriously and methodically trying to isolate each of those potential variables, and test them in turn, until you happen upon the precise combination of circumstances and inputs that combine to produce a (seemingly) random bug. There are very few shortcuts in this process, and those which exist either come from being familiar with a similar issue in the past (“I’ve seen something like this before; when the machine SFX start acting up, it usually means we’ve run out of memory”), or from having access to a log file or other debug information that can give you a vital clue (“this shader error happened right around the time that the client became disconnected, so, even though it doesn’t seem like that should be connected, we ought to explore it”).

All of which is a long way of saying, please keep sending those logs. They help me remain sane, and they help us improve the game.
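The "isolate each variable and test them in turn" idea can be sketched in a few lines of Python. Everything here is hypothetical: the variable names are invented, and `bug_appears` is a stand-in for a tester actually running the game in a given configuration. In this toy version the bug is rigged to need two conditions at once.

```python
from itertools import product

# Hypothetical variables a tester might suspect; a real list would differ.
variables = {
    "is_host": [True, False],
    "uses_vpn": [True, False],
    "save_age": ["new", "old"],
}

def bug_appears(config: dict) -> bool:
    """Stand-in for a manual test run. Rigged so the bug needs host + VPN."""
    return config["is_host"] and config["uses_vpn"]

def find_repro_configs(variables: dict, run_test) -> list:
    """Try every combination of variable values and keep the ones that repro."""
    names = list(variables)
    hits = []
    for values in product(*(variables[name] for name in names)):
        config = dict(zip(names, values))
        if run_test(config):
            hits.append(config)
    return hits

hits = find_repro_configs(variables, bug_appears)
for config in hits:
    print(config)
```

Three two-valued variables already mean eight test runs, and every extra variable doubles that, which is why a log file that rules out a few variables up front saves so much time.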

Great, we can reproduce it, but now what?

Once we’ve identified a bug, and have been able to repro it well enough to start assessing what is causing it, and how we might go about fixing it, that kicks off a triage process in which we try to weigh the benefits of addressing that bug against the costs and risks of doing so. We go through this process not just for bugs, but also for general feedback we receive, and for our own internal ideas for things that we want to add to (or change about) the game. Going through this process is how we prioritize all the different things the development team could be working on, and is a necessary function of the fact that we have a finite number of game developers working for a finite number of hours each day, and therefore there is a limit on just how much we can accomplish between each release. Every development team goes through this sort of process – albeit with their own lingo and quirks – and at the end of the day it is a balancing act.

To give you some insight into how those conversations go, imagine bug triage as a balance scale, with a “REASONS TO FIX NOW” pan on the left, and a “REASONS NOT TO FIX NOW” pan on the right. The relative weight of those two pans will affect the production decision we make about whether to prioritize fixing a bug for the next release, or whether we put it on our to-do backlog for the future.

In the left pan (reasons to prioritize a bug), we have – among others – these considerations:

- Severity

- Frequency

In the right pan (reasons to work on other bugfixes, or other game improvements instead), we have – again, this list is not exhaustive – the following factors:

- Mitigation

- Risk

- Development costs (both in direct time and effort, and in opportunity costs)

So let’s start by looking at which considerations go into the left pan, and which would make us try to prioritize fixing a bug over whatever else the dev team might be working on instead.

Bug Severity

When we talk about severity, that’s a somewhat technical way of asking: how bad is the player experience of the bug, if they encounter it? How much does it ruin their fun?

Imagine for a second a typo in the description of a machine, or a bug that makes the Mining Drills the wrong color. In the grand scheme of things, both of these bugs probably have low severity – they’re annoying, but they don’t really affect someone’s ability to play and enjoy the game. Conversely, a crash bug is the classic example of a high-severity issue, since it’s game over – literally.

But oftentimes severity isn’t quite so clear-cut, and comes down to a question of judgment. Think back to that typo in a machine description – what if, instead of a word that’s spelled wrong, it’s an incorrect number in the description of how many resources it takes to craft that machine? Now this typo looks more severe because instead of just annoying the player, it could actively mislead them, and result in a state where they can’t craft a machine and don’t understand why. It’s still not as severe as a crash bug, say, but the point is that severity is a spectrum, and where any given bug falls on that spectrum is more judgment than science.

So assigning a reasonable severity assessment to any given bug is a critical part of the triage process, and one that we may debate pretty intensely on a bug-to-bug basis. The same bug may seem like a minor annoyance to one player, and a serious fun-killer to another.

Bug Frequency

Frequency is how we talk about the likelihood that any given player will run into a bug during any given game. A bug that makes it so that all machines are broken has basically the worst possible frequency: everyone is going to encounter it every time they play. A single broken machine has a lower frequency, but is still likely to come up a lot. A machine that works right most of the time, but doesn’t work right under certain specific conditions, or if certain other variables are present, is going to turn up less frequently than a machine that never works right.

At the extreme end of the frequency spectrum, there are bugs that we refer to as “once ever” issues – a bug that (seemingly) happens to one player, once, but that no one else seems to encounter, and that no one has been able to reproduce. That bug is still a bug, but it’s a bug with extremely low frequency.

Variables like computer hardware and network stability can also play a role in frequency, and can lead to very different player experiences of frequency for the same issue. A bug that causes network disconnects if the host is connected to a VPN, for example, may have low frequency overall – most players will never even encounter it – but for someone who has to use a VPN for their internet access, the frequency will be 100% when they try to host a game. As with severity, this is one of the reasons why assessing frequency can be more difficult than it at first seems, and why different players may have vastly different experiences with the same bug depending on their individual circumstances, play styles, and just plain old-fashioned luck.

Now, again, this is more art than science, and these judgments are frequently subjective. But, as a rough guideline, you can imagine multiplying a bug’s severity by its frequency, and that will give you a rough sense of how much impact the bug has for the player base as a whole. We want to fix high-severity bugs before low-severity bugs, and we want to fix high-frequency bugs before low-frequency bugs. Bugs that have both high severity and high frequency are the sort of bugs we want to deal with as quickly as possible, and coincidentally they are the sorts of bugs that keep me (and other QA professionals) awake at night.
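The "multiply severity by frequency" guideline can be sketched as a tiny ranking script. The 1-to-5 severity scale, the frequency figures, and the example bugs are all invented for illustration, and nothing here is a real triage tool. As the text says, the actual judgment is more art than science.

```python
from dataclasses import dataclass

@dataclass
class Bug:
    name: str
    severity: int     # assumed scale: 1 (cosmetic) to 5 (crash)
    frequency: float  # assumed: rough share of play sessions that hit it, 0.0 to 1.0

    @property
    def impact(self) -> float:
        """Rough player-base impact: severity multiplied by frequency."""
        return self.severity * self.frequency

# Made-up example bugs with made-up scores.
bugs = [
    Bug("typo in a machine description", severity=1, frequency=0.2),
    Bug("crash under rare conditions", severity=5, frequency=0.05),
    Bug("one machine never works", severity=4, frequency=0.6),
]

# Highest impact first.
ranked = sorted(bugs, key=lambda bug: bug.impact, reverse=True)
for bug in ranked:
    print(f"{bug.impact:.2f}  {bug.name}")
```

Note how close the rare crash and the common typo end up with these made-up scores. A single number like this is only a starting point for the discussion, not a verdict.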

Bug Mitigation

But there are other factors we consider in triage that may lead us to conclude that fixing a bug – even a really bad bug – isn’t the best way to spend our limited development bandwidth. Which brings me to the other side of our imaginary triage balance, and the factors that might lead us to prioritize a particular bug lower on the development backlog.

When I talk about mitigation in this context, I’m talking about: are there steps that players can take that will allow them to avoid this bug, or to minimize its impact, even if we aren’t yet able to fix it? Sometimes, a bug will have straightforward and effective mitigation steps that we can suggest to players. For example, if there is a place in one of the ANEXCAL facilities where the colliders are off, and players can get stuck on geometry, we can post a message for players in the Discord saying, “Hey, for now, try to avoid going into this particular area. And, if you do get stuck there, just use the Respawn option, and that will get you unstuck.” This is an example of the kind of bug where mitigation can be very effective, and can be a good short-term strategy until we are able to fix the underlying issue.

(To be clear, we still want to fix these bugs eventually, but bugs with meaningful mitigation steps are usually ones that we will prioritize lower than bugs we can’t offer workarounds for.)

Bug Risk

Risk is one of the biggest factors in bug triage, and it comes in a couple different forms. On one level, when we’re assessing a bug, one of the questions we ask is: what is the risk that the change we implement won’t actually fix the issue? For bugs that touch on complex systems – particularly if their underlying causes are not fully known – there’s always the chance that the thing you think will fix the problem won’t. (Or, more likely, it will fix some subset of cases where the bug occurs, but some other failure cases will slip through the net.)

But there’s another element of risk assessment as well, and this one is often the most complex aspect of triage: what are the chances that whatever we do to fix this bug will cause other bugs as a result? Software can be highly complex, and is composed of many different interdependent systems that can interact with each other in myriad ways – some obvious, some subtle, and some completely unexpected unless or until something goes wrong. Even for a really bad bug that we really want to fix, we have to weigh the possibility that fixing that bug might break something else. And if a release deadline is coming up, our inability to find and mitigate those unintended consequences may mean that discretion becomes the better part of valor.

To go back to our light switch analogy from before, imagine that the reason that the light doesn’t turn on is that you have a burnt-out bulb. In QA terms, this would be a pretty low-risk fix. You can change the bulb, which should fix the problem, and the chances that it will cause other problems are awfully low. This is the sort of simple, isolated, low-risk bugfix that we can try to turn around quickly.

But imagine, now, that the problem isn’t the bulb. Imagine that the problem is a fault deep in the electrical wiring in your home. And imagine that, to fix that one busted light, you’re going to have to switch off the power, cut into the wall, pull out a bunch of wires, and then put them back together again. This is now a much riskier fix, and the chance that you might break something else while fixing your broken light is a possibility that has to be considered, and weighed appropriately. When it comes to game bugs, these sorts of solutions that require us to touch a lot of different game systems, or to tweak a lot of different variables, are the sorts of things that we want to undertake cautiously, and with as much time for testing and iteration as possible.

Many years ago, when I worked on The Beatles: Rock Band, one of the senior producers on the team printed up posters with pictures of Paul McCartney on them, pointing at the viewer. Below that they added the caption: “Don’t Fuck This Up.” (One journalist wrote an article about that game, and felt compelled to protect the delicate sensibilities of their readers, so they amended the poster’s commandment to the somewhat milder “Don’t Foul This Up.”) Anyway, I think about that poster all the time when we’re doing risk assessment. I always want to fix bugs, but I also want to make sure we don’t foul up anything that is already working.

Development costs: time, effort, and lost opportunity

The last thing we’ll consider is the development cost of fixing a particular bug – not just in terms of the time it would take to devise, implement, and test the fix itself, but in terms of opportunity cost as well. Time spent fixing one bug is time not spent fixing another. It’s also time not spent working on player requests, and building all the additional content that we really want to bring to Techtonica. Time is fleeting, and time is finite. Any bug we move up our list of priorities means that some other bug or feature has to move down to make space.

So, all other factors being equal, a bug that we think we can fix in one day will end up getting prioritized above a similarly bad bug that might take two. And particularly complex bugs with particularly demanding fixes have to be balanced against all the other feature development work that we could do with the same amount of time. It’s not a simple balancing act, but it’s one that we take seriously, and the question that guides us is always the same: at the end of the day, what will create the best experience for players?

So, returning to our imaginary scale, that’s the process we go through as we evaluate each bug: how bad and how frequent is it on the one side, and how difficult and risky to fix is it on the other?
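For readers who think in code, the imaginary scale could be caricatured like this. It is a toy model with invented weights and invented example bugs, not a formula anyone actually uses. The 0-to-1 scores, the flat 0.2 credit for a workaround, and the `days_available` parameter are all assumptions made for the sketch.

```python
from dataclasses import dataclass

@dataclass
class TriageInput:
    name: str
    severity: float       # assumed 0.0 to 1.0: how badly it ruins the fun
    frequency: float      # assumed 0.0 to 1.0: how likely a player is to hit it
    has_workaround: bool  # is there a mitigation players can use for now?
    risk: float           # assumed 0.0 to 1.0: guess at collateral damage
    cost_days: float      # estimated days to devise, implement, and test

def fix_now(bug: TriageInput, days_available: float) -> bool:
    """Toy balance scale: left pan is impact, right pan is reasons to wait."""
    left_pan = bug.severity * bug.frequency
    workaround_weight = 0.2 if bug.has_workaround else 0.0  # arbitrary weight
    right_pan = bug.risk + workaround_weight + bug.cost_days / days_available
    return left_pan > right_pan

# Two invented bugs: a "change the bulb" fix and a "cut open the walls" fix.
simple_fix = TriageInput("isolated one-line fix", 0.6, 0.8, False, 0.1, 1)
deep_fix = TriageInput("cross-system rewiring", 0.7, 0.3, True, 0.8, 15)

for bug in (simple_fix, deep_fix):
    verdict = "fix now" if fix_now(bug, days_available=10) else "backlog"
    print(f"{bug.name}: {verdict}")
```

In practice the weights are argued over bug by bug rather than computed, but the shape is the same: impact on one side, and mitigation, risk, and cost on the other.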

About those Monorail bugs

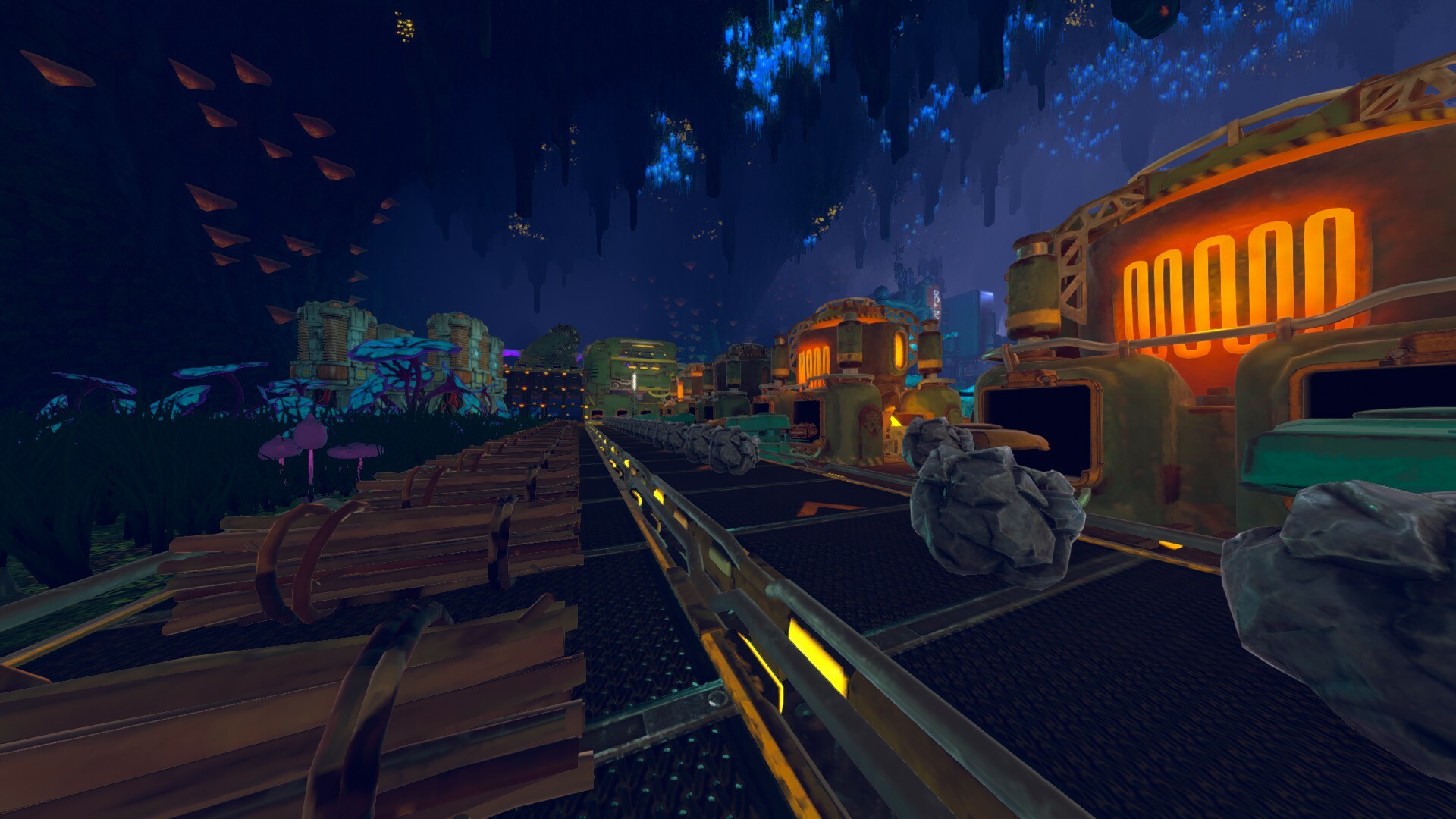

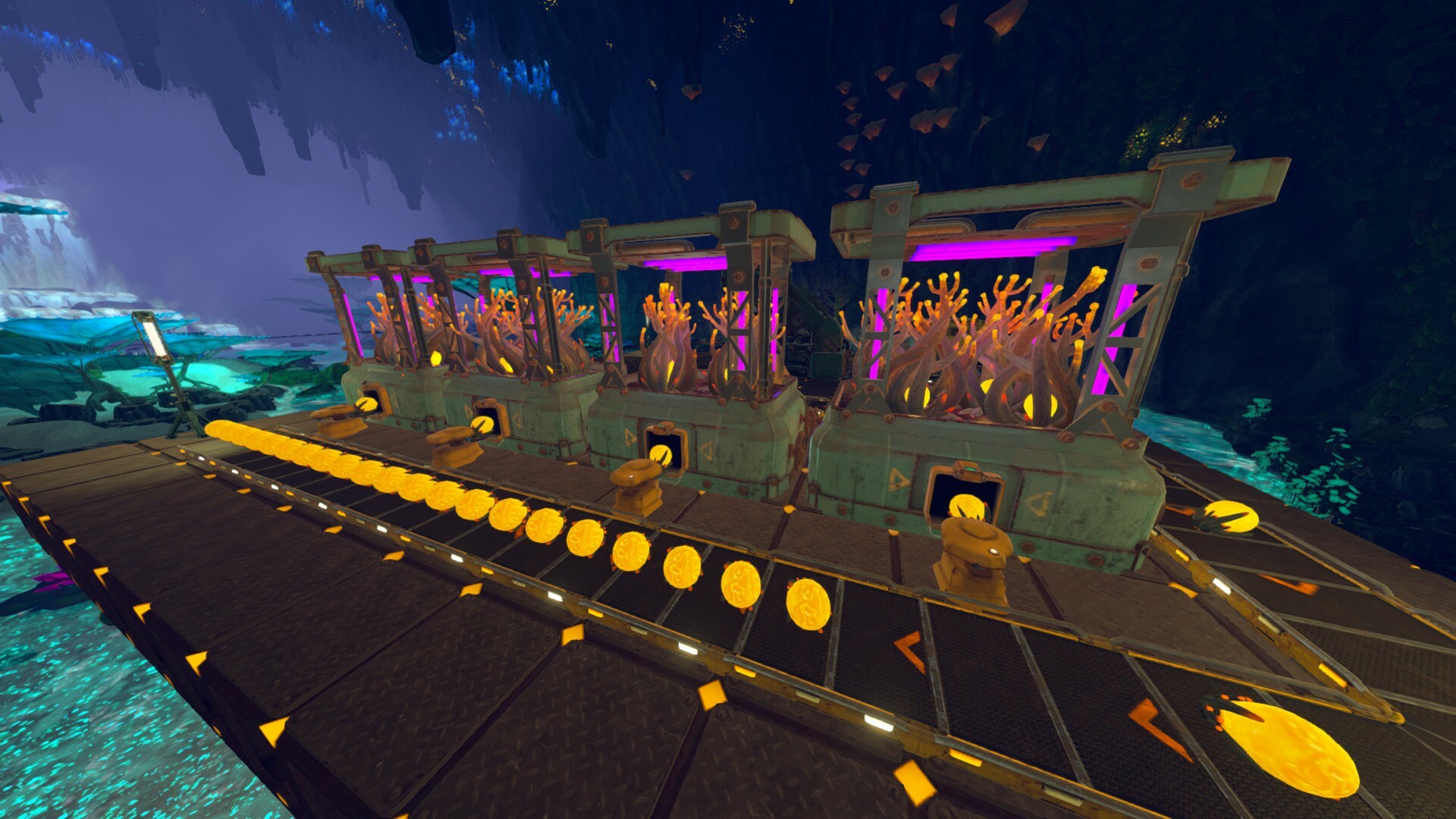

To use an example of a real problem, we currently have a bug in Techtonica (or, more accurately, we have a set of interrelated bugs) which make it so that Monorail systems don’t always play nice with power networks. The result is that Monorail Depots sometimes act as though they don’t have enough power, when in fact they should.

Players have given us lots of useful information about these bugs, and we’ve started to get a handle on what the underlying causes are. This is definitely an annoying issue to run into, since it happens at a stage of the game where you have probably built a kickass factory that you want to automate with cool trains, and having those trains not work the way they’re supposed to is a serious feel-bad.

Not all players will encounter these bugs, because they only happen for some configurations of transit and power systems, particularly those using HVC cables to power Depots on different power networks. But for players who do run into this bug, it’s a letdown, and the mitigation steps to resolve it involve re-configuring your rail and/or power networks, which is (to put it nicely) a pain in the butt. So these are bugs we are eager to fix.

The trouble is, they are also bugs that are complex and risky to fix. They require making changes to code that sits at the nexus between one complex game system (transit networks) and another complex game system (power networks), and which touches a whole lot of other game functions besides. In order to get in there and fix this stuff, we’re going to have to cut open the walls, and redo a lot of the wiring, to harken back to my analogy from before. And doing that properly is going to take a lot of time, and a lot of testing. I want to fix these Monorail bugs, but I also don’t want to foul transit or power (or Macca knows what else) up.

And so, having looked hard at these Monorail bugs, we made the tough call that we’re not going to have them fixed for our 0.1.1 update. We’re going to use that time to work on other bugfixes and community requests instead, so that we can make Techtonica better in other ways right now. We’ll come back to Monorails at a later date, when we know we can do those bugfixes right, and not break other stuff in the process.

That’s the sort of bug triage decision that never makes anyone happy, and isn’t any fun to explain.

The reason I wanted to write this blog post is to let you all know that there is a method behind the madness, and that just because we may not fix the bug that bothers you most in any given release, it isn’t because we aren’t listening, and it isn’t because we don’t want to. We want to fix all the bugs. But we also want to keep the game stable, and to deliver cool new features.

It all goes back to that balancing act, and we do the best that we can to make the right calls, and to be as transparent as we can about the thinking behind them.

So please keep sending us your logs and letting us know what you think. That information helps us to make better decisions, and Techtonica is all the better for it.